(This post was largely translated from our Japanese Webmaster Central Blog.)

It seems the world is going mobile: many people use mobile phones on a daily basis, and a large user base searches on Google’s mobile search page. However, as a webmaster, running a mobile site and tapping into the mobile search audience isn’t easy. Mobile sites not only use a different format from normal desktop sites, but the management methods and expertise required are also quite different. This results in a variety of new challenges. As a mobile search engineer, it’s clear to me that while many mobile sites were designed with mobile viewing in mind, they weren’t designed to be search friendly. I’d like to help ensure that your mobile site is also available for users of mobile search.

Here are troubleshooting tips to help ensure that your site is properly crawled and indexed:

Verify that your mobile site is indexed by Google

If your web site doesn’t show up in the results of a Google mobile search even when you use the ‘site:’ operator, your site may have one or both of the following issues:

Googlebot may not be able to find your site

Googlebot, our crawler, must crawl your site before it can be included in our search index. If you just created the site, we may not yet be aware of it. If that’s the case, create a Mobile Sitemap and submit it to Google to inform us of the site’s existence. A Mobile Sitemap can be submitted using Google Webmaster Tools, in the same way as a standard Sitemap.

Googlebot may not be able to access your site

Some mobile sites refuse access to anything but mobile phones, making it impossible for Googlebot to access the site, and therefore making the site unsearchable. Our crawler for mobile sites is “Googlebot-Mobile”. If you’d like your site crawled, please allow any User-agent including “Googlebot-Mobile” to access your site. You should also be aware that Google may change its User-agent information at any time without notice, so it is not recommended that you check if the User-agent exactly matches “Googlebot-Mobile” (which is the string used at present). Instead, check whether the User-agent header contains the string “Googlebot-Mobile”. You can also use DNS lookups to verify Googlebot.

Verify that Google can recognize your mobile URLs

Once Googlebot-Mobile crawls your URLs, we check whether each URL is viewable on a mobile device. Pages we determine aren’t viewable on a mobile phone won’t be included in our mobile site index (although they may be included in the regular web index). This determination is based on a variety of factors, one of which is the “DTD (Document Type Definition)” declaration. Check that your mobile-friendly URLs’ DTD declaration is in an appropriate mobile format such as XHTML Mobile or Compact HTML. If it’s in a compatible format, the page is eligible for the mobile search index. For more information, see the Mobile Webmaster Guidelines.

If you have any questions about mobile sites, post them to our Webmaster Help Forum, where webmasters around the world, as well as our own team, are happy to help you with your problem.
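The User-agent advice in this post (test whether the header contains “Googlebot-Mobile” rather than matching it exactly, and optionally confirm the crawler through DNS) might be sketched in Python as below. This is only an illustration: the function names are made up for this example, and the trusted host suffixes are an assumption about where genuine Googlebot hosts resolve, not something stated in the post.

```python
import socket

# Assumption: host name suffixes under which genuine Googlebot machines resolve.
TRUSTED_SUFFIXES = (".googlebot.com", ".google.com")


def is_googlebot_mobile(user_agent):
    """Substring test, so the check survives changes to the rest of the header."""
    return "Googlebot-Mobile" in (user_agent or "")


def verify_crawler_ip(ip, reverse=socket.gethostbyaddr, forward=socket.gethostbyname):
    """Reverse-resolve the IP, check the host name, then forward-resolve it back.

    The two lookup functions are parameters so they can be replaced in tests.
    """
    try:
        hostname = reverse(ip)[0]
    except OSError:
        return False
    if not hostname.endswith(TRUSTED_SUFFIXES):
        return False
    try:
        return forward(hostname) == ip
    except OSError:
        return False
```

A server would typically call `is_googlebot_mobile` on every request and reserve `verify_crawler_ip` for cases where it needs to be sure the request really comes from the crawler, since DNS lookups are comparatively slow.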

Running desktop and mobile versions of your site

(This post was largely translated from our Japanese version of the Webmaster Central Blog.)

Recently I introduced several methods to ensure your mobile site is properly indexed by Google. Today I’d like to share information useful for webmasters who manage both desktop and mobile phone versions of a site.

One of the most common problems for webmasters who run both mobile and desktop versions of a site is that the mobile version appears for users on a desktop computer, or that the desktop version appears when someone finds the site from a mobile device. There are two viable ways to deal with this scenario:

Redirect mobile users to the correct version

When a mobile user or crawler (like Googlebot-Mobile) accesses the desktop version of a URL, you can redirect them to the corresponding mobile version of the same page. Google notices the relationship between the two versions of the URL and displays the standard version for searches from desktops and the mobile version for mobile searches.

If you redirect users, please make sure that the content on the corresponding mobile/desktop URL matches as closely as possible. For example, if you run a shopping site and a mobile phone requests a desktop-version URL, make sure that the user is redirected to the mobile version of the page for the same product, and not to the homepage of the mobile version of the site. We occasionally find sites using this kind of redirect in an attempt to boost their search rankings, but this practice only results in a negative user experience, and so should be avoided at all costs.
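As a rough sketch of a page-to-page redirect, the Python function below maps a desktop URL to the same path and query string on a mobile host, so a product page redirects to the matching product page rather than the mobile homepage. The host `m.example.com`, the marker strings, and the function name are hypothetical choices for this example; a real site would use its own URL scheme and device detection.

```python
from urllib.parse import urlsplit, urlunsplit

MOBILE_HOST = "m.example.com"  # hypothetical mobile host
# Hypothetical User-agent fragments treated as "mobile" in this sketch.
MOBILE_MARKERS = ("Googlebot-Mobile", "iPhone", "Android")


def mobile_redirect_target(desktop_url, user_agent):
    """Return the matching mobile URL for mobile clients, or None for everyone else."""
    if not any(marker in (user_agent or "") for marker in MOBILE_MARKERS):
        return None  # desktop users and the desktop crawler stay where they are
    parts = urlsplit(desktop_url)
    # Only the host changes; path, query and fragment are preserved.
    return urlunsplit((parts.scheme, MOBILE_HOST, parts.path, parts.query, parts.fragment))
```

For instance, a request for `http://www.example.com/product.php?item=swedish-fish` from a client whose User-agent contains “Googlebot-Mobile” would be sent to the same product page on the mobile host.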

On the other hand, when a desktop browser or our web crawler, Googlebot, accesses a mobile-version URL, it’s not necessary to redirect them to the desktop version. For instance, Google doesn’t automatically redirect desktop users from its mobile site to its desktop site; instead, it includes a link on the mobile-version page to the desktop version. These links are especially helpful when a mobile site doesn’t provide the full functionality of the desktop version, since users can easily navigate to the desktop version if they prefer.

Switch content based on User-agent

Some sites have the same URL for both desktop and mobile content, but change their format according to User-agent. In other words, both mobile users and desktop users access the same URL (i.e. no redirects), but the content/format changes slightly according to the User-agent. In this case, the same URL will appear for both mobile search and desktop search, and desktop users can see a desktop version of the content while mobile users can see a mobile version of the content.

However, note that if you fail to configure your site correctly, your site could be considered to be cloaking, which can lead to your site disappearing from our search results. Cloaking refers to an attempt to boost search result rankings by serving different content to Googlebot than to regular users. This causes problems such as less relevant results (pages appear in search results even though their content is actually unrelated to what users see/want), so we take cloaking very seriously.

So what does “the page that the user sees” mean if you provide both versions at a single URL? As I mentioned in the previous post, Google uses “Googlebot” for web search and “Googlebot-Mobile” for mobile search. To remain within our guidelines, you should serve the same content to Googlebot as a typical desktop user would see, and the same content to Googlebot-Mobile as you would to the browser on a typical mobile device. It’s fine if the content served to Googlebot differs from the content served to Googlebot-Mobile.

One example of how your site could unintentionally be flagged for cloaking is if it returns a message like “Please access from mobile phones” to desktop browsers, but then returns a full mobile version to both crawlers (so Googlebot receives the mobile version). In this case, the page which web search users see (e.g. “Please access from mobile phones”) is different from the page which Googlebot crawls (e.g. “Welcome to my site”). Again, we detect cloaking because we want to serve users the same relevant content that Googlebot or Googlebot-Mobile crawled.
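The rule described above (Googlebot gets what a desktop user gets, Googlebot-Mobile gets what a mobile user gets) can be illustrated with a small Python sketch. The marker strings and the function name are assumptions made for this example; they are not part of any Google guideline.

```python
# Hypothetical User-agent fragments that identify mobile browsers in this sketch.
MOBILE_BROWSER_MARKERS = ("iPhone", "Android", "DoCoMo")


def choose_variant(user_agent):
    """Pick the page variant so each crawler is treated like the users it represents."""
    ua = user_agent or ""
    # Test the mobile crawler first: "Googlebot" is a substring of "Googlebot-Mobile".
    if "Googlebot-Mobile" in ua:
        return "mobile"
    if "Googlebot" in ua:
        return "desktop"
    if any(marker in ua for marker in MOBILE_BROWSER_MARKERS):
        return "mobile"
    return "desktop"
```

With this arrangement, the problematic setup from the example cannot occur: whatever a desktop browser receives, Googlebot receives the same variant, and likewise for mobile browsers and Googlebot-Mobile.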

Diagram of serving content from your mobile-enabled site

We’re working on a daily basis to improve search results and solve problems, but because the relationship between PC and mobile versions of a web site can be nuanced, we appreciate the cooperation of webmasters. Your help will result in more mobile content being indexed by Google, improving the search results provided to users. Thank you for your cooperation in improving the mobile search user experience.

Reference: Jun Mukai, Software Engineer, Mobile Search Team, Google Webmaster Central Blog

Verifying a Blogger blog in Webmaster Tools

You may have seen our recent announcement of changes to the verification system in Webmaster Tools. One side effect of this change is that blogs hosted on Blogger (that haven’t yet been verified) will have to use the meta tag verification method rather than the “one-click” integration from the Blogger dashboard. The “Webmaster Tools” auto-verification link from the Blogger dashboard is no longer working and will soon be removed. We’re working to reinstate an automated verification approach for Blogger hosted blogs in the future, but for the time being we wanted you to be aware of the steps required to verify your Blogger blog in Webmaster Tools.

Step-By-Step Instructions:

In Webmaster Tools

1. Click the “Add a site” button on the Webmaster Tools Home page

2. Enter your blog’s URL (for example, googlewebmastercentral.blogspot.com) and click the “Continue” button to go to the Manage verification page

3. Select the “Meta tag” verification method and copy the meta tag provided

In Blogger

4. Go to your blog and sign in

5. From the Blogger dashboard click the “Layout” link for the blog you’re verifying

6. Click the “Edit HTML” link under the “Layout” tab which will allow you to edit the HTML for your blog’s template

7. Paste the meta tag (copied in step 3) immediately after the <head> element within the template HTML and click the “SAVE TEMPLATE” button

In Webmaster Tools

8. On the Manage Verification page, confirm that “Meta tag” is selected as the verification method and click the “Verify” button

Your blog should now be verified. You’re ready to start using Webmaster Tools!

Posted by Jonathan Simon, Webmaster Trends Analyst

New parameter handling tool helps with duplicate content issues

Duplicate content has been a hot topic among webmasters and on our blog for over three years. One of our first posts on the subject came out in December of ’06, and our most recent post was last week. Over the past three years, we’ve been providing tools and tips to help webmasters control which URLs we crawl and index, including a) use of 301 redirects, b) www vs. non-www preferred domain setting, c) change of address option, and d) rel="canonical".

We’re happy to announce another feature to assist with managing duplicate content: parameter handling. Parameter handling allows you to view which parameters Google believes should be ignored or not ignored at crawl time, and to overwrite our suggestions if necessary.

Let’s take our old example of a site selling Swedish fish. Imagine that your preferred version of the URL and its content looks like this:

http://www.example.com/product.php?item=swedish-fish

However, you may also serve the same content on different URLs depending on how the user navigates around your site, or your content management system may embed parameters such as sessionid:

http://www.example.com/product.php?item=swedish-fish&category=gummy-candy

http://www.example.com/product.php?item=swedish-fish&trackingid=1234&sessionid=5678

With the “Parameter Handling” setting, you can now provide suggestions to our crawler to ignore the parameters category, trackingid, and sessionid. If we take your suggestion into account, the net result will be a more efficient crawl of your site, and fewer duplicate URLs.

Since we launched the feature, here are some popular questions that have come up:

Are the suggestions provided a hint or a directive?

Your suggestions are considered hints. We’ll do our best to take them into account; however, there may be cases when the provided suggestions may do more harm than good for a site.

When do I use parameter handling vs rel="canonical"?

rel="canonical" is a great tool to manage duplicate content issues, and has had huge adoption. The differences between the two options are:

- rel="canonical" has to be put on each page, whereas parameter handling is set at the host level

- rel="canonical" is respected by many search engines, whereas parameter handling suggestions are only provided to Google

Use whichever option works best for you; it’s fine to use both if you want to be very thorough.

As always, your feedback on our new feature is appreciated.
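To see why ignoring the category, trackingid, and sessionid parameters reduces duplicates, here is a small Python sketch that strips those parameters from the Swedish fish URLs used in this post. It only illustrates the effect of the setting; it is not a description of how the crawler works internally, and the function name is made up for this example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# The parameters the post suggests ignoring for the example site.
IGNORED_PARAMS = {"category", "trackingid", "sessionid"}


def strip_ignored_params(url, ignored=IGNORED_PARAMS):
    """Drop ignored query parameters, keeping the remaining ones in their original order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in ignored
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))
```

Applied to the two longer example URLs, the function yields the preferred URL `http://www.example.com/product.php?item=swedish-fish` in both cases, so three crawlable URLs collapse into one.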

Reunifying duplicate content on your website

Tuesday, October 06, 2009 at 3:14 PM

Handling duplicate content within your own website can be a big challenge. Websites grow; features get added, changed and removed; content comes—content goes. Over time, many websites collect systematic cruft in the form of multiple URLs that return the same contents. Having duplicate content on your website is generally not problematic, though it can make it harder for search engines to crawl and index the content. Also, PageRank and similar information found via incoming links can get diffused across pages we aren’t currently recognizing as duplicates, potentially making your preferred version of the page rank lower in Google.

Steps for dealing with duplicate content within your website

- Recognize duplicate content on your website.

The first and most important step is to recognize duplicate content on your website. A simple way to do this is to take a unique text snippet from a page and to search for it, limiting the results to pages from your own website by using a site: query in Google. Multiple results for the same content show duplication you can investigate.

- Determine your preferred URLs.

Before fixing duplicate content issues, you’ll have to determine your preferred URL structure. Which URL would you prefer to use for that piece of content?

- Be consistent within your website.

Once you’ve chosen your preferred URLs, make sure to use them in all possible locations within your website (including in your Sitemap file).

- Apply 301 permanent redirects where necessary and possible.

If you can, redirect duplicate URLs to your preferred URLs using a 301 response code. This helps users and search engines find your preferred URLs should they visit the duplicate URLs. If your site is available on several domain names, pick one and use the 301 redirect appropriately from the others, making sure to forward to the right specific page, not just the root of the domain. If you support both www and non-www host names, pick one, use the preferred domain setting in Webmaster Tools, and redirect appropriately.

- Implement the rel="canonical" link element on your pages where you can.

Where 301 redirects are not possible, the rel="canonical" link element can give us a better understanding of your site and of your preferred URLs. The use of this link element is also supported by major search engines such as Ask.com, Bing and Yahoo!.

- Use the URL parameter handling tool in Google Webmaster Tools where possible.

If some or all of your website’s duplicate content comes from URLs with query parameters, this tool can help you to notify us of important and irrelevant parameters within your URLs. More information about this tool can be found in our announcement blog post.

What about the robots.txt file?

One item which is missing from this list is disallowing crawling of duplicate content with your robots.txt file. We now recommend not blocking access to duplicate content on your website, whether with a robots.txt file or other methods. Instead, use the rel="canonical" link element, the URL parameter handling tool, or 301 redirects. If access to duplicate content is entirely blocked, search engines effectively have to treat those URLs as separate, unique pages since they cannot know that they’re actually just different URLs for the same content. A better solution is to allow them to be crawled, but clearly mark them as duplicate using one of our recommended methods. If you allow us to crawl these URLs, Googlebot will learn rules to identify duplicates just by looking at the URL and should largely avoid unnecessary recrawls in any case. In cases where duplicate content still leads to us crawling too much of your website, you can also adjust the crawl rate setting in Webmaster Tools.
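To make the 301 redirect step from the list above more concrete, the Python sketch below decides whether a request should be permanently redirected to the preferred host, and forwards to the same specific page rather than the root of the domain. The host names and the function name are hypothetical; a real site would normally configure this in its web server rather than in application code.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.example.com"                   # hypothetical preferred host
DUPLICATE_HOSTS = {"example.com", "www.example.net"}  # hypothetical alternate hosts


def permanent_redirect(url):
    """Return (301, target_url) for duplicate hosts, or None if no redirect is needed."""
    parts = urlsplit(url)
    if parts.hostname in DUPLICATE_HOSTS:
        # Swap only the host so the visitor lands on the same page, not the homepage.
        return 301, urlunsplit(parts._replace(netloc=PREFERRED_HOST))
    return None
```

A request for `http://example.com/product.php?item=swedish-fish` would thus be answered with a 301 pointing at the same path and query on `www.example.com`, while requests already on the preferred host are served normally.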

We hope these methods will help you to master the duplicate content on your website! Information about duplicate content in general can also be found in our Help Center. Should you have any questions, feel free to join the discussion in our Webmaster Help Forum.

Reference from: http://googlewebmastercentral.blogspot.com/2009/10/reunifying-duplicate-content-on-your.html

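SEO – Common Tag and Search Engine Optimization

The ultimate task of a search engine is to be able to “read” the data on the web; only then can it truly process what the data means. Google, Yahoo, and others have long been able to process RDF data tags, and a few days ago Yahoo also announced support for Common Tag … What do these changes mean for SEO? Which SEO tasks will they affect?

What is RDF (Resource Description Framework)? RDF is a model for describing data. For example, when we say “Mr. Wang is an artist”, the sentence contains three elements: “Mr. Wang”, “is a”, and “artist”.

“Mr. Wang” is the subject, “is a” is the predicate, and “artist” is the object.

If we also say “Mr. Li is an artist”, then “Mr. Wang” and “Mr. Li” share the same attribute under the predicate “is a”. In plain terms, they are both artists!

If data has the kind of structure described above, a search engine can work out what a document’s content means.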
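The subject/predicate/object idea can be illustrated with a few lines of Python. This is only a toy model using plain tuples, not a real RDF library, and the function name is invented for this example.

```python
from collections import defaultdict

# Each statement is a (subject, predicate, object) triple, as in the example sentences.
triples = [
    ("Mr. Wang", "is a", "artist"),
    ("Mr. Li", "is a", "artist"),
]


def group_subjects(statements):
    """Group subjects that share the same predicate and object."""
    groups = defaultdict(list)
    for subject, predicate, obj in statements:
        groups[(predicate, obj)].append(subject)
    return dict(groups)
```

Looking up `("is a", "artist")` in the result returns both names, which is the machine-readable equivalent of “they are both artists”.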

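In the figure below, the left image shows how browser software sees a document, and the right image shows how a human sees it.

The goal of these technologies is to let the computer see both views clearly and understand what the content actually is.

The red part of the figure above is the RDFa (RDF in attributes) description. RDFa can be seen as a simplified version of RDF designed to be used together with today’s XHTML, and dc stands for Dublin Core … From this markup we can tell that “The trouble with Bob” is the title and that the author is Alice.

OK … so what is Common Tag?

Common Tag uses the RDFa framework so that commonly used tags that have already been defined can be used to describe your content. As Commontag.org puts it: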

Unlike free-text tags, Common Tags are references to unique, well-defined concepts, complete with metadata and their own URLs. With Common Tag, site owners can more easily create topic hubs, cross-promote their content, and enrich their pages with free data, images and widgets.

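Common Tags are not free-text tags: you must find a tag that describes your content and has already been defined before you can use it to describe your document. The architecture is as follows: tags are defined through tagging platforms developed by companies and organizations such as AdaptiveBlue, DERI (NUI Galway), Faviki, Freebase, Yahoo!, Zemanta, Zigtag …

For example, below, Common Tag is used to define an image as the Phoenix Mars mission.

For now, these ways of describing content will not have much impact on SEO, but their influence will gradually grow. Without clearly described data, a search engine cannot capture the real meaning of a document and can only analyze it literally. Seeing “Mr. Wang”, it will not know that he and “Mr. Zhang” are both “artists”; it will only recognize a “Mr.” whose surname is “Wang” … or, worse, just three characters that mean nothing at all to the search engine!

This is also what the previous post, “The Future of SEO and SEM: The Arrival of 3.0”, was getting at: in the future, SEO will no longer be something a handful of external links can take care of. Today’s SEO vendors rely on labor-intensive work to set up large numbers of blogs, copy in all sorts of content, and then link back to the sites they manage. If you look closely, you will find unrelated content linked together haphazardly. This approach only produces a pile of web garbage, and how long it will stay effective is anyone’s guess …

Further references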

ReadWriteWeb: Common Tag Bring Standards to Metadata

Freebase

DBPedia

Introducing Rich Snippets

Tuesday, May 12, 2009 at 12:00 PM

Webmaster Level: All

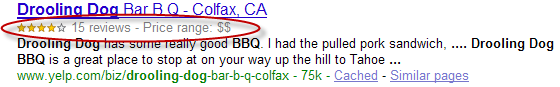

As a webmaster, you have a unique understanding of your web pages and the content they represent. Google helps users find your page by showing them a small sample of that content — the “snippet.” We use a variety of techniques to create these snippets and give users relevant information about what they’ll find when they click through to visit your site. Today, we’re announcing Rich Snippets, a new presentation of snippets that applies Google’s algorithms to highlight structured data embedded in web pages.

Rich Snippets give users convenient summary information about their search results at a glance. We are currently supporting data about reviews and people. When searching for a product or service, users can easily see reviews and ratings, and when searching for a person, they’ll get help distinguishing between people with the same name. It’s a simple change to the display of search results, yet our experiments have shown that users find the new data valuable — if they see useful and relevant information from the page, they are more likely to click through. Now we’re beginning the process of opening up this successful experiment so that more websites can participate. As a webmaster, you can help by annotating your pages with structured data in a standard format.

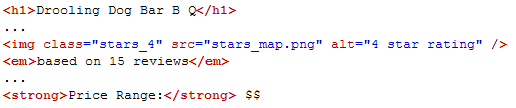

To display Rich Snippets, Google looks for markup formats (microformats and RDFa) that you can easily add to your own web pages. In most cases, it’s as quick as wrapping the existing data on your web pages with some additional tags. For example, here are a few relevant lines of the HTML from Yelp’s review page for “Drooling Dog BarBQ” before adding markup data:

and now with microformats markup:

or alternatively, use RDFa markup. Either format works:

By incorporating standard annotations in your pages, you not only make your structured data available for Google’s search results, but also for any service or tool that supports the same standard. As structured data becomes more widespread on the web, we expect to find many new applications for it, and we’re excited about the possibilities.

To ensure that this additional data is as helpful as possible to users, we’ll be rolling this feature out gradually, expanding coverage to more sites as we do more experiments and process feedback from webmasters. We will make our best efforts to monitor and analyze whether individual websites are abusing this system: if we see abuse, we will respond accordingly.

To prepare your site for Rich Snippets and other benefits of structured data on the web, please see our documentation on structured data annotations.

Now, time for some Q&A with the team:

If I mark up my pages, does that guarantee I’ll get Rich Snippets?

No. We will be rolling this out gradually, and as always we will use our own algorithms and policies to determine relevant snippets for users’ queries. We will use structured data when we are able to determine that it helps users find answers sooner. And because you’re providing the data on your pages, you should anticipate that other websites and other tools (browsers, phones) might use this data as well. You can let us know that you’re interested in participating by filling out this form.

What about other existing microformats? Will you support other types of information besides reviews and people?

Not every microformat corresponds to data that’s useful to show in a search result, but we do plan to support more of the existing microformats and define RDFa equivalents.

What’s next?

We’ll be continuing experiments with new types (beyond reviews and people) and hope to announce support for more types in the future.

I have too much data on my page to mark it all up.

That wasn’t a question, but we’ll answer anyway. For the purpose of getting data into snippets, we don’t need every bit of data: it simply wouldn’t fit. For example, a page that says it has “497 reviews” of a product probably has data for 10 and links to the others. Even if you could mark up all 497 blocks of data, there is no way we could fit it into a single snippet. To make your part of this grand experiment easier, we have defined aggregate types where necessary: a review-aggregate can be used to summarize all the review information (review count, average/min/max rating, etc.).
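To illustrate what an aggregate summarizes, the Python sketch below reduces a list of individual ratings to the kind of values mentioned above (review count and average/min/max rating). The dictionary keys and the function name are hypothetical and are not the actual property names of the review-aggregate type.

```python
def summarize_reviews(ratings):
    """Reduce individual ratings to a count, average, minimum and maximum."""
    if not ratings:
        raise ValueError("at least one rating is required")
    return {
        "count": len(ratings),
        "average": sum(ratings) / len(ratings),
        "min": min(ratings),
        "max": max(ratings),
    }
```

A page with hundreds of reviews would mark up only these few summary values instead of every individual review block.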

Why do you support multiple encodings?

A lot of previous work on structured data has focused on debates around encoding. Even within Google, we have advocates for microformat encoding, advocates for various RDF encodings, and advocates for our own encodings. But after working on this Rich Snippets project for a while, we realized that structured data on the web can and should accommodate multiple encodings: we hope to emphasize this by accepting both microformat encoding and RDFa encoding. Each encoding has its pluses and minuses, and the debate is a fine intellectual exercise, but it detracts from the real issues.

We do believe that it is important to have a common vocabulary: the language of object types, object properties, and property types that enable structured data to be understood by different applications. We debated how to address this vocabulary problem, and concluded that we needed to make an investment. Google will, working together with others, host a vocabulary that various Google services and other websites can use. We are starting with a small list, which we hope to extend over time.

Wherever possible, we’ll simply reuse vocabulary that is in wide use: we support the pre-existing vCard and hReview types, and there are a variety of other types defined by various communities. Sites that use Google Custom Search will be able to define their own types, which we will index and present to users in rich Custom Search results pages. Finally, we encourage and expect this space to evolve based on new ideas from the structured data community. We’ll notice and reach out when our crawlers pick up new types that are getting broad use.

Written by Kavi Goel, Ramanathan V. Guha, and Othar Hansson

http://googlewebmastercentral.blogspot.com/2009/05/introducing-rich-snippets.html